Interactive and Visual Prompt Engineering for Ad-hoc Task Adaptation with Large Language Models

Hendrik Strobelt, Albert Webson, Victor Sanh, Benjamin Hoover, Johanna Beyer, Hanspeter Pfister, Alexander M. Rush

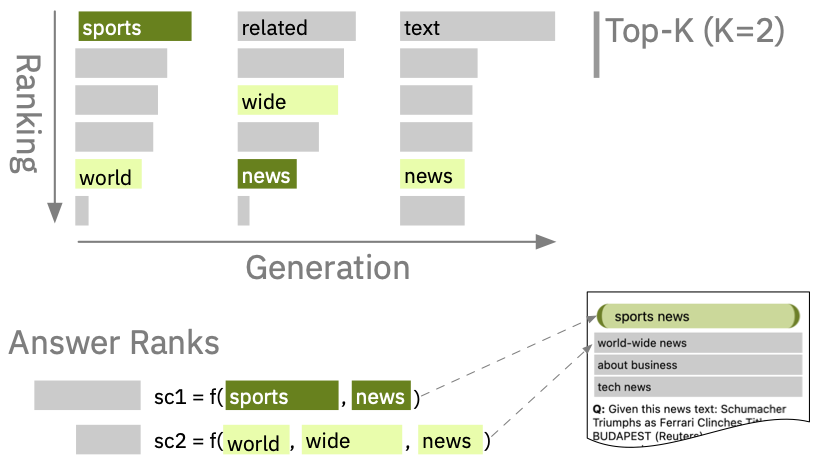

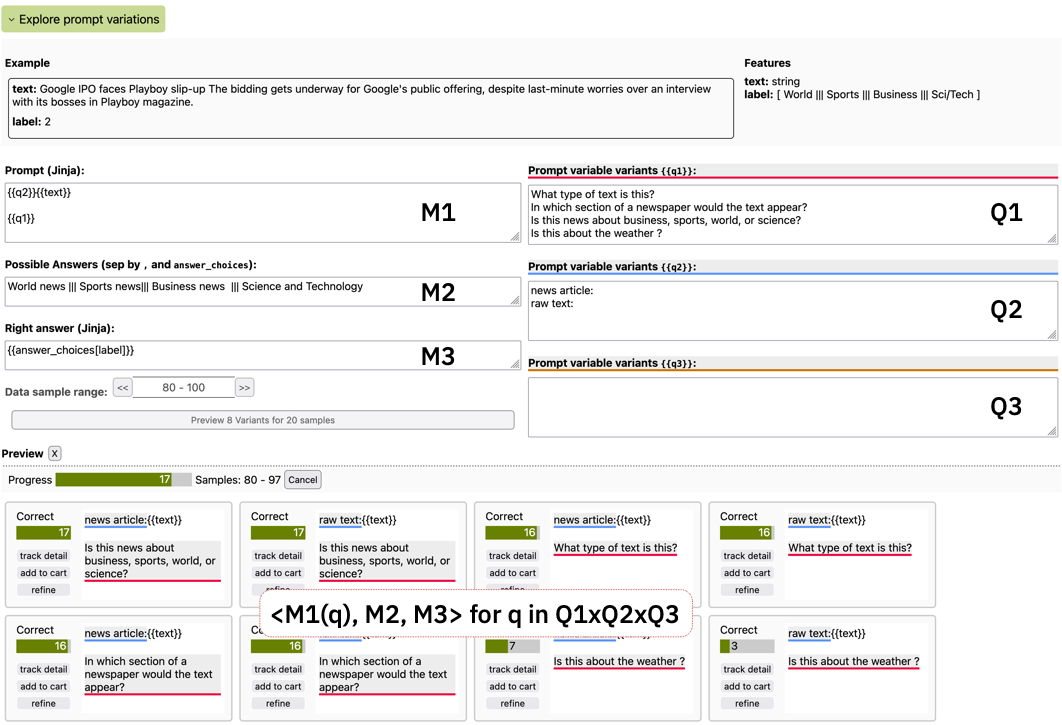

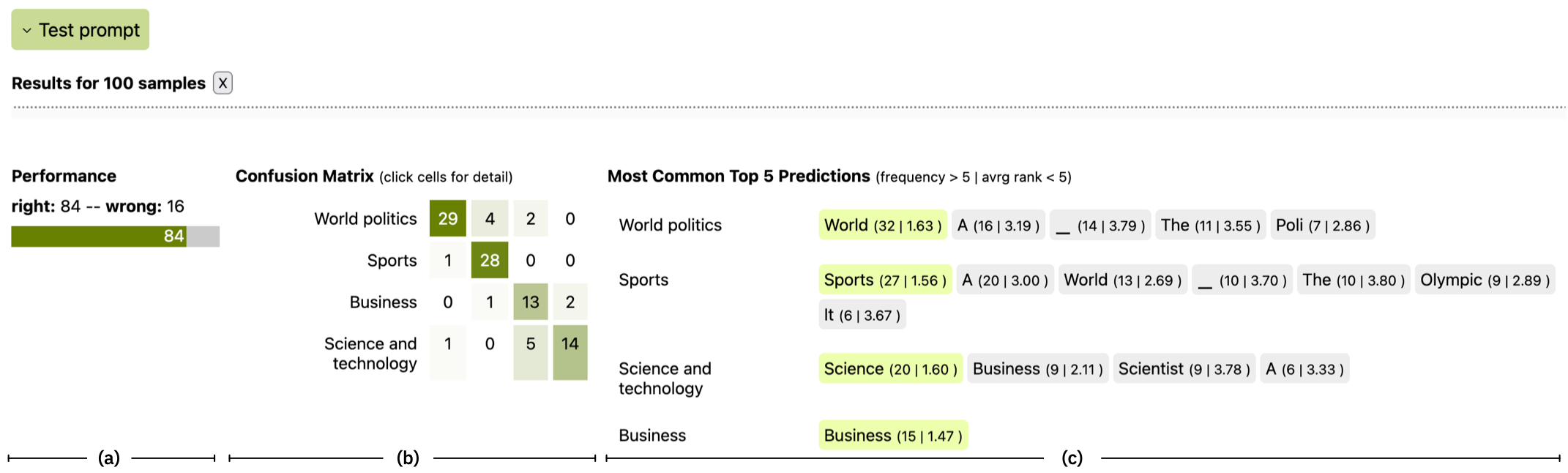

State-of-the-art neural language models can now be used to solve ad-hoc language tasks through zero-shot prompting, without the need for supervised training. This approach has gained popularity in recent years, and researchers have demonstrated prompts that achieve strong accuracy on specific NLP tasks. However, finding a prompt for a new task requires experimentation: different prompt templates with different wording choices lead to significant differences in accuracy. We present PromptIDE, a tool that allows users to experiment with prompt variations, visualize prompt performance, and iteratively optimize prompts. We developed a workflow that lets users first focus on model feedback using small data before moving to a large-data regime, where promising prompts are empirically grounded with quantitative measures of the task. The tool then allows easy deployment of the newly created ad-hoc models. We demonstrate the utility of PromptIDE and our workflow through several real-world use cases.
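The core loop described above (enumerate wording choices in a prompt template, score each variant on labeled examples, screen on a small sample, then re-score the promising variants on a larger one) can be sketched as follows. This is a minimal sketch under stated assumptions, not PromptIDE's implementation or API: the template, the slot names (`question`, `choices`), the helper functions (`expand_variants`, `accuracy`, `rank_variants`, `two_stage`), and the review examples are all hypothetical. `toy_choose` is a keyword-matching stand-in for a language model; a real model would instead score each answer choice given the prompt.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical types: a variant maps each slot name to one wording choice.
Variant = Dict[str, object]
Example = Tuple[str, int]  # (input text, index of the correct answer choice)
Chooser = Callable[[str, Sequence[str]], int]


def expand_variants(slots: Dict[str, Sequence[object]]) -> List[Variant]:
    """Return every combination of slot values (the cartesian product)."""
    keys = sorted(slots)
    return [dict(zip(keys, combo)) for combo in product(*(slots[k] for k in keys))]


def accuracy(template: str, variant: Variant, examples: Sequence[Example],
             choose: Chooser) -> float:
    """Fraction of examples where the chooser picks the labeled answer choice."""
    correct = 0
    for text, label in examples:
        # str.format ignores unused keyword arguments, so "choices" is only used for scoring.
        prompt = template.format(text=text, **variant)
        if choose(prompt, variant["choices"]) == label:
            correct += 1
    return correct / len(examples)


def rank_variants(template: str, variants: Sequence[Variant],
                  examples: Sequence[Example], choose: Chooser) -> List[Tuple[float, Variant]]:
    """Score every variant and sort best first (stable for ties)."""
    scored = [(accuracy(template, v, examples, choose), v) for v in variants]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def two_stage(template: str, slots: Dict[str, Sequence[object]],
              small: Sequence[Example], large: Sequence[Example],
              choose: Chooser, keep: int = 2) -> List[Tuple[float, Variant]]:
    """Screen all variants on a small sample, then re-score the best `keep` on a larger set."""
    screened = rank_variants(template, expand_variants(slots), small, choose)
    shortlist = [variant for _, variant in screened[:keep]]
    return rank_variants(template, shortlist, large, choose)


def toy_choose(prompt: str, choices: Sequence[str]) -> int:
    """Hypothetical stand-in, NOT a language model: picks the second choice
    whenever a negative cue word appears anywhere in the prompt."""
    negative_cues = ("not", "boring")
    return 1 if any(cue in prompt.lower() for cue in negative_cues) else 0


# Hypothetical sentiment task with two wording slots.
TEMPLATE = "Review: {text}\n{question}"
SLOTS = {
    "question": ["Did the reviewer like the movie?", "Is this review positive or not?"],
    "choices": [("yes", "no"), ("positive", "negative")],
}
EXAMPLES = [
    ("I love this film.", 0),
    ("A great cast and a sharp script.", 0),
    ("Boring and far too long.", 1),
    ("I would not watch it again.", 1),
]

if __name__ == "__main__":
    # Screen on a tiny two-example sample, then re-score the shortlist on all examples.
    for acc, variant in two_stage(TEMPLATE, SLOTS, EXAMPLES[1:3], EXAMPLES, toy_choose):
        print(f"{acc:.2f}  {variant['question']!r}  {variant['choices']}")
```

With this stand-in chooser, both variants that use the question "Is this review positive or not?" score 0.50 on the four examples, because the word "not" in the question itself pushes every prediction to the second choice. This is a toy analogue of how wording alone can shift accuracy, which is why such variants drop out after the small-data screening step.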

Citation

@misc{https://doi.org/10.48550/arxiv.2208.07852,
  doi = {10.48550/ARXIV.2208.07852},
  url = {https://arxiv.org/abs/2208.07852},
  author = {Strobelt, Hendrik and Webson, Albert and Sanh, Victor and Hoover, Benjamin and Beyer, Johanna and Pfister, Hanspeter and Rush, Alexander M.},
  keywords = {Computation and Language (cs.CL), Human-Computer Interaction (cs.HC), Machine Learning (cs.LG), FOS: Computer and information sciences},
  title = {Interactive and Visual Prompt Engineering for Ad-hoc Task Adaptation with Large Language Models},
  publisher = {arXiv},
  year = {2022}
}